Why do pollsters keep getting it wrong?

Polling has repeatedly failed to capture the depth and breadth of populist dissent. 2020 was no different.

After a long wait, it became clear at the end of last week that Joe Biden will be the next president of the United States.

Some states, incredibly, have not finished counting their votes. There is also at least one recount to come – in Georgia – and there may be more to follow. But at the time of writing, CNN has Biden on 279 Electoral College votes to Donald Trump’s 214, with 45 votes still to be allocated. If the margins in undeclared states stay as they are, the final tally will be 306 to 232 in Biden’s favour.
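For readers who want to check the Electoral College sums, here is a minimal sketch. It assumes only the figures quoted above plus the fixed total of 538 Electoral College votes; the variable names are purely illustrative.

```python
# Electoral College arithmetic, using the figures quoted in the article.
# Variable names are illustrative, not taken from any official source.
TOTAL_ELECTORAL_VOTES = 538

biden_called = 279   # CNN's count for Biden at the time of writing
trump_called = 214   # CNN's count for Trump at the time of writing

# Votes not yet allocated to either candidate.
unallocated = TOTAL_ELECTORAL_VOTES - biden_called - trump_called

# Projected final tally if margins in undeclared states hold.
biden_projected = 306
trump_projected = 232

# How the unallocated votes would split under that projection.
biden_pending = biden_projected - biden_called
trump_pending = trump_projected - trump_called

# A majority of the Electoral College is needed to win.
votes_to_win = TOTAL_ELECTORAL_VOTES // 2 + 1

print(f"Unallocated: {unallocated}")
print(f"Pending split: Biden {biden_pending}, Trump {trump_pending}")
print(f"Votes needed to win: {votes_to_win}")
```

The projected totals add up to 538, and the pending votes account for exactly the 45 that were still outstanding.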

Over the weekend, as Biden crept towards the all-important 270 mark, Democrats celebrated in the streets. They expected to win, and they did. But the Blue Wave many anticipated did not materialise. Though almost all the polls predicted a Democrat victory, they failed to anticipate how closely run things would be.

Pollsters got things spectacularly wrong in 2016, giving almost no credence to the idea that Trump might beat Hillary Clinton. And in 2020, though they made the right call in backing Biden, they greatly underestimated the strength of support for Trump once again. Why do they keep getting it wrong?

Let’s look at what some of them actually said this time round. FiveThirtyEight, run by polling guru Nate Silver, conducted modelling which simulated the election 40,000 times to determine the likely winners. In a sample of 100 such outcomes presented on election day, Biden won 89, and Trump 10 (one outcome was a tie). In nearly 90 per cent of scenarios, Biden would win out.

For the Senate races, FiveThirtyEight found that in 75 of 100 scenarios, the Democrats won overall control, while the Republicans won in only 25 cases. In reality, the Democrats have taken only one seat from the Republicans and, as FiveThirtyEight now admits, they are unlikely to flip the Senate.

The Economist was similarly confident prior to the vote, giving Trump just a three per cent chance of winning. In the event, he came a lot closer than this would imply.

YouGov also expected a big win for the Democrats, predicting 364 Electoral College votes for Biden and 174 for Trump, as well as an 8.9 per cent popular vote margin between Biden and Trump. Currently, the actual margin is three per cent.

YouGov’s Multilevel Regression and Poststratification (MRP) model looked at a wide range of data, including national and local polling as well as various other informational inputs. Marcus Roberts, YouGov’s director of international projects, sounded fairly confident when speaking to the Spectator. He said the MRP represented the organisation’s ‘best and final call’ about the election, and, ‘as an industry, we really think we’ve cracked the mainstay of the problem that led to the polling misjudgement at the battleground state level in 2016’.

But for all the complex models the industry produced, they left the problem largely un-cracked. It turns out that, regardless of how much colour-coding you put on your models, and no matter how many sums you do, if the data you feed in is inaccurate, you will end up with inaccurate results.

Pollsters have clearly not learned the lessons. Nate Silver of FiveThirtyEight published an article four days after the vote entitled ‘Biden won – pretty convincingly in the end’. But as Freddie Sayers of UnHerd has pointed out, 25,000 votes going the other way in the right places would have swung the whole race. In this context, can we really call the Democrats’ victory a ‘pretty convincing’ win? More than 150million people voted in this election, and 25,000 is just 0.017 per cent of them – a tiny margin.
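The percentage is easy to verify. The sketch below takes the two round figures quoted above (25,000 votes and 150million voters) at face value; both are approximations, and the variable names are illustrative.

```python
# Share of the electorate represented by the votes that could have
# swung the race, using the round figures quoted in the article.
swing_votes = 25_000          # votes that, per Sayers, could have flipped the result
total_votes = 150_000_000     # approximate turnout ("over 150million")

share = swing_votes / total_votes

# Format as a percentage with three decimal places.
print(f"{share:.3%}")  # prints 0.017%
```

Because turnout was in fact somewhat above 150million, the true share would be slightly smaller still.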

True, Biden won the most votes of any candidate in history. True, he outdid Trump in the popular vote. But it should be obvious by now that this does not automatically translate into a clear win. For better or worse (worse, in spiked’s view) it is the Electoral College that decides who gets the keys to the White House – and the numbers there could easily have been very different.

So what are the lessons that need to be learned? Before the election, I drew attention to the problem of ‘shy’ Trump voters – Republicans and Trump supporters who were unlikely to tell the pollsters how they truly intended to vote. One pollster, Trafalgar Group, led by Robert Cahaly, attempted to account for ‘social desirability bias’ in its polling – the tendency for people not to admit who they are voting for due to a fear of being judged. In the event, Trafalgar got the result wrong, too, predicting an easy Trump victory. Nevertheless, it was right to try to bring these voters into the polling equation. Both the popular vote and Electoral College results were closer than many pollsters expected, and these voters were a key part of the reason why.

Other pollsters were dismissive of the phenomenon. Back in October, two ‘public-opinion specialists’ claimed in the Los Angeles Times that they had ‘found no evidence of hidden Trump supporters this time around’. They cited a CNN poll in which only three per cent of respondents failed to state their vote intention. They also referenced work by Reality Check Insights – which one of the article’s authors co-founded – that tried to get around the ‘shy’ Trump issue by asking survey respondents which candidate they considered to be most ‘energetic’. This subjective measure was supposed to ‘signal a voter’s underlying vote intention even if they are still deliberating internally’. Trump did not see a ‘boost’ on this question.

Similarly, on the eve of the election, YouGov ran the headline, ‘Trump voters no more shy than Biden voters’. FiveThirtyEight also claimed that ‘Trump supporters aren’t “shy”’. It acknowledged that some Trump voters might be missed by the polls, but said there was ‘scant evidence’ for the ‘shy Trump’ theory. It highlighted a survey by Morning Consult which found that Trump performed at the same level whether interviews were conducted by phone or by the more anonymous method of online polls.

But Freddie Sayers has raised another important question: the degree to which pollsters can actually access the kinds of people who are more likely to vote for Trump. Perhaps it is not just a question of the way people respond to polls, but whether they respond at all.

Indeed, the disconnect between pollsters and voters is stark. Pollsters are part of the political establishment whose biases are predictable. But it is not these people who decide elections – it is the vast masses of ordinary people, and their intentions were harder to determine. Pollsters still appear not to understand how vast swathes of the country live, think and vote.

The 2020 election results may prove to be an even heavier blow to pollsters’ credibility than 2016. They called the right winner, but once again misunderstood or simply missed some of the most powerful forces at work in society. As Brendan O’Neill has argued on spiked, despite elites’ predictions – and many commentators’ hopes – populism was not soundly rejected by American voters. Like the broader elites they are part of, the pollsters need to adapt to the reality of this new phenomenon quickly if they are to salvage what remains of their reputations. But for now, the best election prediction anyone can make is that the pollsters will get it wrong.

Paddy Hannam is a spiked intern. Follow him on Twitter: @paddyhannam.

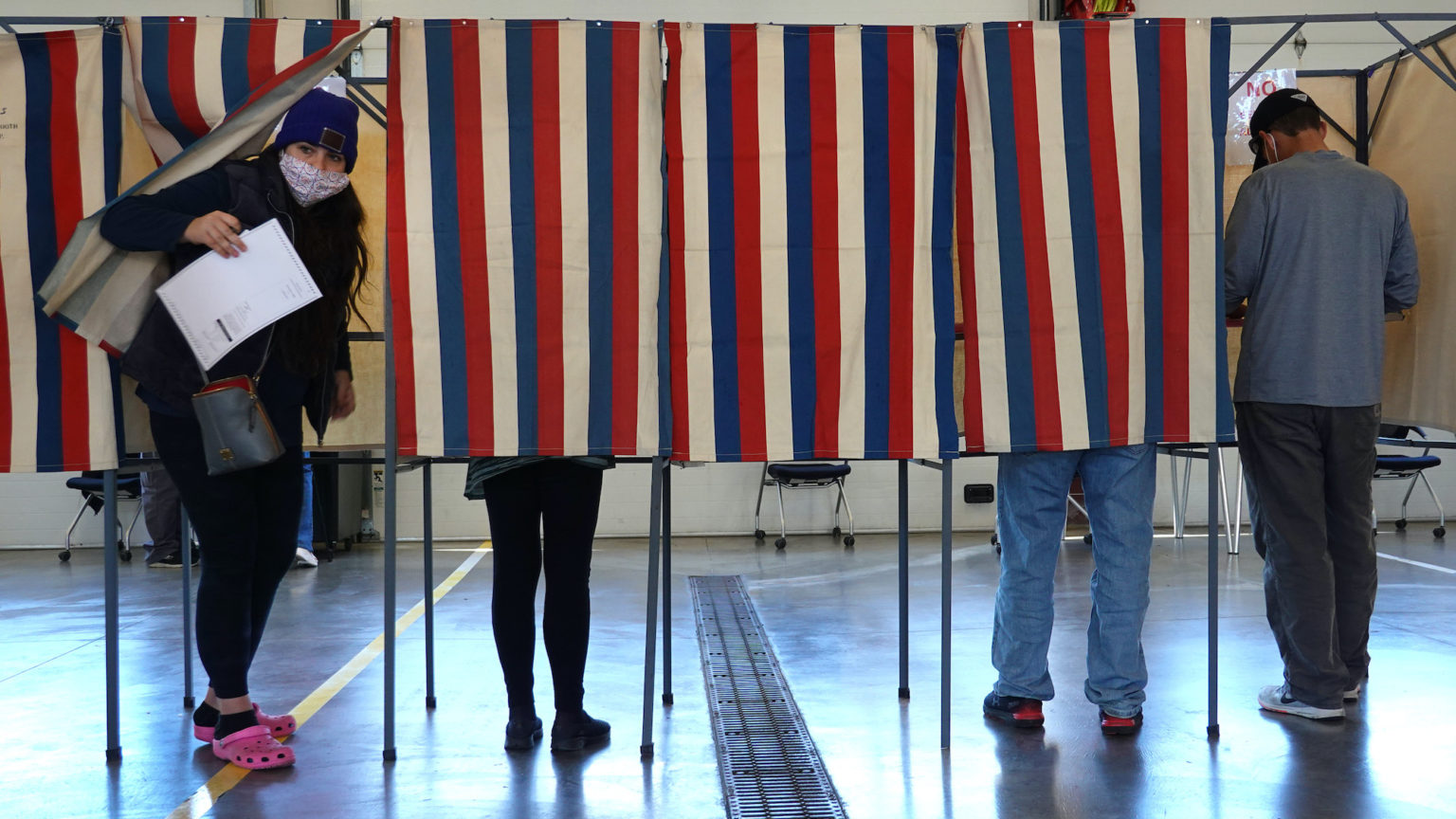

Picture by: Getty.

