Why AI won’t replace your job

Artificial intelligence is not nearly as clever as the tech industry claims.

Propaganda and financial deception are more closely related than you may think. A decade ago, blogger Frank Fisher noticed the similarities between publicity-hungry NGOs’ ‘round-robin generation and regurgitation of statistical lies’ and a particular kind of fraud. He coined the term ‘carousel propaganda’ to describe it. Today, carousel propaganda has become a potent weapon in the technology industry’s armoury of persuasion.

In finance, ‘carousel fraud’ belongs to a category of shady practices that take advantage of trust, reputation and lapses in verification. These ruses are only possible when inspection or corroboration should take place but does not. Eventually, for example, the unpaid VAT is discovered or the cheque finally bounces. But by that point, it’s too late. In the same way, carousel propaganda can circulate very widely indeed until it is debunked – and even then it often enjoys an afterlife.

The latest example of carousel propaganda is a new study by OpenAI with researchers at the University of Pennsylvania, published last week. The study estimates that far more jobs are vulnerable to the deployment of AI large language models (LLMs) – such as OpenAI’s ChatGPT – than previous studies suggested.

Previous research concluded that only three per cent of US workers might have half or more of their work performed well by AI. OpenAI’s own numbers are much higher. In the OpenAI study, some 19 per cent of workers could see at least half of their tasks affected by AI. How did OpenAI come up with such a figure?

It helps that the OpenAI researchers focus not on what AI can do today, but on what they imagine it might do in the future. They admit in a footnote that, ‘We were strongly motivated by our observed capabilities of GPT-4’, OpenAI’s latest update to ChatGPT. This is like saying that if you could grow wings, you would be able to compete with drones or light helicopters, at least at low altitudes.

The authors also assume the technology will be generalisable, meaning that AI will be able to adapt to new, unseen data – a position not shared by other experts. This assumption allows the OpenAI researchers to claim that the adoption of LLMs is ‘likely to be pervasive’. The researchers also cite work finding that ‘GPT-4 serves as an effective discriminator, capable of applying intricate taxonomies and responding to changes in wording and emphasis’. This conclusion is based on work conducted by OpenAI itself.

All of these assumptions are questionable. For example, LLMs show a dazzling ability to replicate examination answers. But they often fail, instead producing ‘hallucinations’ – answers that sound correct, but are in fact nonsensical. Which should we measure? The times LLMs have tricked a human or the times they have not? And given that examiners are getting wise to new AI auto-completion tools, how will LLMs cope if the substance or the marking criteria of the exam changes?

The authors of the OpenAI study do allow themselves some wriggle room in their predictions. They preface their findings with the disclaimer that ‘technical feasibility does not guarantee labour… outcomes’. Ultimately, though, this doesn’t matter. With carousel propaganda, the trick relies on the media not inspecting the goods too closely. A potential exposure to AI in some jobs gets whipped up into an imminent threat to all jobs. Hence we get headlines like: ‘OpenAI: ChatGPT could disrupt 19 per cent of US jobs. Is yours on the list?’ Soon we can expect headlines claiming that one in five jobs will not simply be ‘disrupted’ by AI, but wiped out entirely.

A similar sleight of hand has been used by AI proponents before. Notoriously in 2013, Oxford academics Carl Benedikt Frey and Michael Osborne produced a report suggesting ‘47 per cent of total US employment’ was at risk from automation. The following year, this statistic was given publicity thanks to Erik Brynjolfsson and Andrew McAfee’s book, The Second Machine Age. ‘Technological progress is accelerating quickly past our intuitions and expectations’, they asserted. Brynjolfsson and McAfee cited 10 references for the improving cognitive abilities of technology, which would facilitate the expected dramatic improvements in automation. All 10 refer back to the same source: Osborne and Frey, 2013. The 47 per cent figure had become a prophecy.

In typical carousel-fraud fashion, it took years for the cheque to bounce. The OECD later confirmed that Osborne and Frey had exaggerated the impact of automation significantly. It was a hugely optimistic assessment, wrote MIT professor Frank Levy. Levy called the Osborne and Frey report ‘a set of guesses with lots of padding to increase the appearance of “scientific precision”’. This is also an apt description of OpenAI’s self-serving new research.

Osborne and Frey’s list of doomed occupations had included bus drivers and fast-food cooks. A decade on, there’s no evidence that a single bus driver or fast-food cook has been made redundant by artificial intelligence. Nor is anyone even predicting that today.

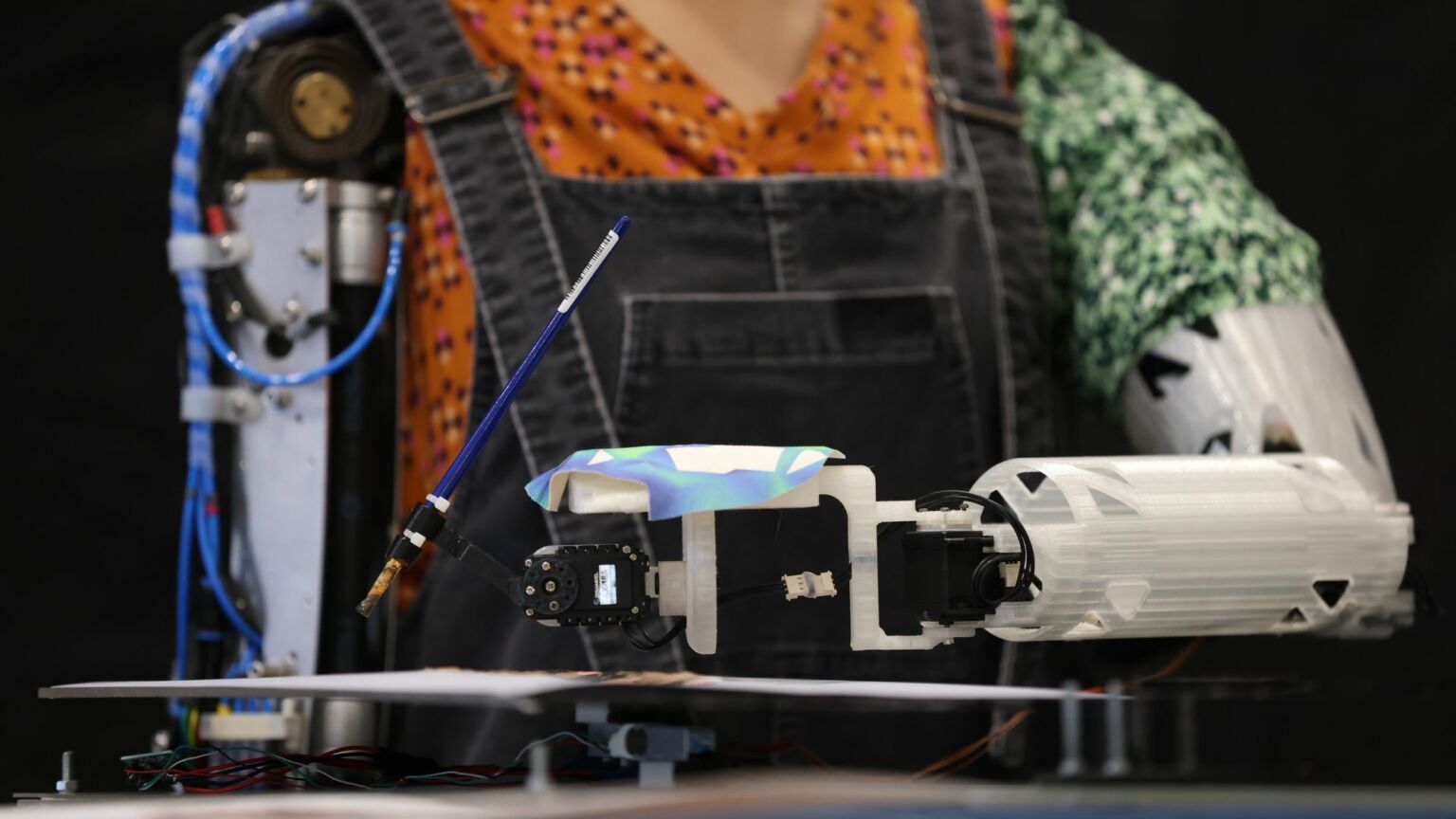

Back in 2012, a deep-learning system’s victory in the ImageNet image-recognition competition kicked off the current era of AI enthusiasm. But since then, progress has fallen far short of the predictions. LLMs are brilliant mimics, but not the clever AI that we were promised even five years ago. Cars are no nearer to driving themselves. OpenAI shut down its robotics work in 2021. And Amazon is having second thoughts about its till-free, AI-driven Fresh stores, finding that removing shop staff is more difficult and expensive than it looks.

While the goals of the AI evangelicals may vary, the industry’s carousel propaganda is united in its intent to bounce policy influencers and policymakers into useful and sympathetic positions. The only puzzle is why the tech industry feels the need to bother. Our political elites are only too willing to indulge in the wildest AI delusions. The AI fanatics are already pushing at an open door.

Andrew Orlowski is a weekly columnist at the Telegraph. Visit his website here. Follow him on Twitter: @AndrewOrlowski.

Picture by: Getty.
