We need to calm down about artificial intelligence

ChatGPT can do some fun party tricks, but it’s not about to take over the world.

Did you know, only one country begins and ends with the same letter? Of course you do. It’s ‘Chad’. And did you know that sharks and dolphins can fly? Yes they can – but not as fast as a falcon, which happens to be the fastest marine mammal of all. And what might the names ‘Nixon’ and ‘Ford’ conjure up? Nothing at all, hopefully, as they have never had anything to do with each other.

All these ‘facts’ are provided by ChatGPT, the latest showcase from OpenAI, which has been widely praised by enthusiasts of artificial intelligence. Of course, all these facts are false, absurdly so. Usually, the media love to do a pile-on when technology goes hilariously wrong. So you might have expected that ChatGPT’s glitches would by now be fomenting the biggest pile-on since Clive Sinclair took an electric tricycle for a spin around Alexandra Palace in the 1980s. Yet the credulous cadres of journalists who have been trying out ChatGPT this week are instead mesmerised by its supposed powers. Most outlets have decided to suspend disbelief, and none more so than the New York Times.

‘The potential societal implications of ChatGPT are too big to fit into one column’, marvels the paper’s star technology columnist, Kevin Roose. Look out Google, he suggests. This AI chatbot could have an impact as great as the iPhone, or even greater: ‘Maybe this is, as some commenters have posited, the beginning of the end of all white-collar knowledge work, and a precursor to mass unemployment’, he adds.

But hold on a second. How can ChatGPT be both risibly stupid, and yet so good it makes humans redundant? The answer surely lies with the credulity of the witness – the willingness of reporters and pundits to bury any scepticism.

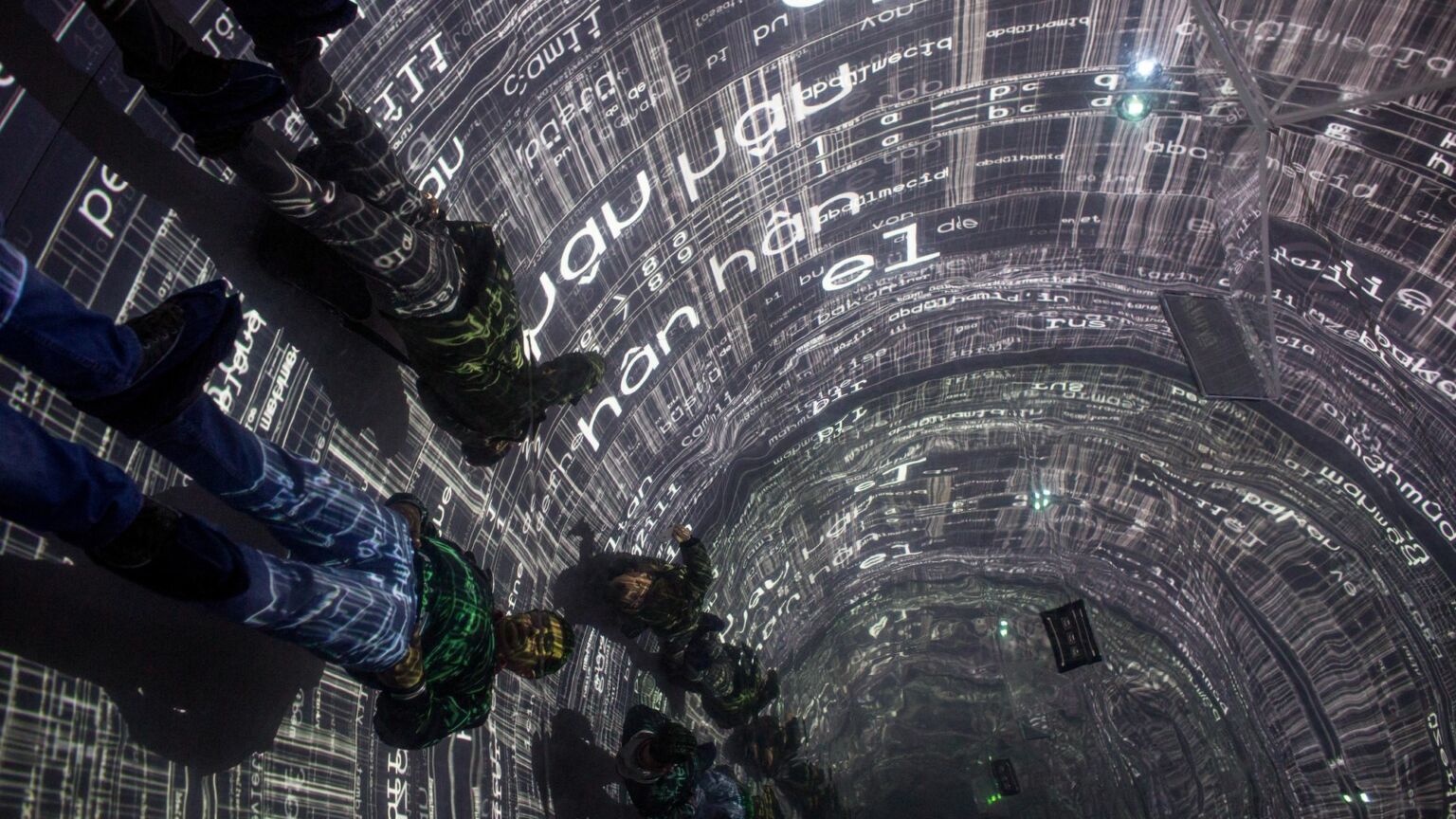

ChatGPT stands for Chat-based Generative Pre-trained Transformer. As the G suggests, the software is ‘generative’, meaning it pumps out a new text or image based on what it has ingested. Image generators captured the world’s attention last year with the launch of OpenAI’s DALL-E. New AI image generators are now flooding on to the internet. Google has released Phenaki and Imagen, which allow you to generate videos based on text instructions. Facebook owner Meta has released Make-A-Video, which may be the pick of the bunch. A generative portrait-creating app, Lensa, currently sits atop the Apple App Store’s most-downloaded charts.

Ask ChatGPT to turn out a poem, a sample of dramatic dialogue or a news report, and it will generate an uncanny likeness. It can also generate computer code. The sentences it creates may look syntactically correct and the poem may even rhyme. It is very good at churning out a dumb facsimile. This faithfulness to its source material can cause trouble, as Stack Overflow, a popular community Q&A site for programmers, has found. The site has temporarily banned users from sharing answers to coding problems generated by ChatGPT, after its forum was inundated by AI-generated text. ‘The primary problem is that while the answers which ChatGPT produces have a high rate of being incorrect, they typically look like they might be good and the answers are very easy to produce’, the site explained.

In fact, all ChatGPT is doing is creating a pastiche, based on vast data sets and millions of hours of training on huge computer-server farms. Having ingested almost every written word and digitised visual image created by humanity, it should be quite faithful by now. But it still can’t understand anything at all. It is dumber than a rock.

Facsimiles like this don’t usually fool us so easily, or we would all be taking stuffed dogs out for walks. So why are they fooling so many journalists and pundits? Clearly, we need a lot more nuance when it comes to assessing the capabilities and value of such systems. But the self-mesmerised media seem unable to provide this.

As neuroscientist and author Gary Marcus, a long-time critic of AI, writes on Substack: ‘How come ChatGPT can seem so brilliant one minute and so breathtakingly dumb the next?’ Responding to Roose’s hagiographic Times article, Marcus lists all the things ChatGPT can’t do. This is a far more useful guide for the layman to the capabilities and limitations of AI than Roose’s article. It is ridiculous, Marcus tweeted, to call ChatGPT a ‘precursor to mass unemployment’ when it ‘struggles with maths, logic, reliability, and physical and psychological reasoning’. It also lacks spatial and temporal awareness. That is how ChatGPT can end up writing about cannibalism at the Tiananmen Square protests. (There was no cannibalism at the Tiananmen Square protests.)

This is not to say that machine learning is useless. Its indiscriminate nature – it doesn’t really know whether it is processing a picture or a text – can be very useful. A beautiful example is its ability to upscale old movie footage to HD or 4K resolution. The AI guesses what the glitches are very well, and fills in the gaps even more effectively. In some technical fields, machine learning is a useful tool in the software armoury. But it’s also an expensive tool that may be no more efficient than other, better-established techniques.

As another AI critic, Filip Piekniewski, points out, we have been here before. ‘Breaking: people who believed radiologists and truck drivers will get replaced and Tesla will have a million robo-taxis by the end of 2020 now believe ChatGPT and stable diffusion are going to replace writers and creators’, he tweeted this week.

One can understand the hype for ChatGPT from the army of LinkedIn influencers and pundits whose quest for attention and followers requires them to hop from zeitgeist to zeitgeist. These poundshop futurists alight on stunts such as ChatGPT to burnish their own credentials as vanguardistas, positioned boldly at the forefront of trends. But journalists have a duty to be more sceptical. You can only really view ChatGPT as society-transforming software if you completely discard any scepticism, omit its very obvious flaws and willingly suspend your disbelief. In other words, you have to become a participant at a magic show who is blind to the sleight of hand.

Religious undertones are never far away from today’s discussion of AI, and the Times’s Roose can’t resist donning the mantle of Biblical prophesier: ‘GPT-4, the next incarnation of the company’s large-language model, is rumored to be coming out sometime next year… We are not ready.’ Cue the drums. While a sensible sceptic hears the Benny Hill music, the media pundits hear the stentorian orchestral score to a disaster movie.

It’s worth recalling how, in the Hans Christian Andersen folktale, it took a child to point out that the emperor was not wearing any clothes. The royal courtiers could not, and today’s media courtiers are incapable of doing so either. In their willingness to become protagonists in this dramatic narrative of ever-advancing AI, they have surrendered their scepticism – which ought to be their most valuable tool.

Andrew Orlowski is a weekly columnist at the Telegraph. Follow him on Twitter: @AndrewOrlowski.

Picture by: Getty.
