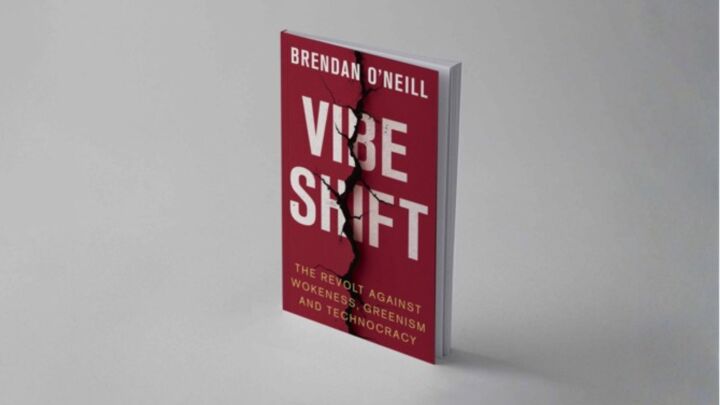

The cult that wants to give human rights to robots

Anthropic’s ‘Claude Constitution’ reflects the misanthropic, ultra-utilitarian Effective Altruism agenda.


After the artificial-intelligence company, Anthropic, put its in-house philosopher, Amanda Askell, up for a profile by the Wall Street Journal earlier this month, its media team must have thought things had gone quite well. Askell did not commit any howlers. Nothing she said went viral.

But the resulting fallout has revealed something that was previously kept well hidden: the influence and beliefs of the radical utilitarian movement that’s shaping our perceptions of AI – namely, Effective Altruism (EA). Thanks to the interview, the world now has a better idea of the radically misanthropic nature of the project Askell promotes. And to misquote Alan Partridge on Adolf Hitler, the more the world knows about EA, the less it’s going to like it.

The WSJ calls Scottish-born philosophy graduate Askell ‘the one woman Anthropic trusts to teach AI morals’. Founded in 2021 as a breakaway from OpenAI, Anthropic is behind the Claude family of large language models. The company takes its mission very earnestly. Employing a philosopher (whose LinkedIn profile more modestly describes her as a ‘member of the technical staff’) is intended to signal that it grapples with big ideas.

Askell was put up for an interview to promote robot rights: specifically, a new version of what Anthropic calls ‘Claude’s Constitution’, which she helped devise. This is ‘a detailed description of our vision for Claude’s behaviour and values’. The newest version of the document, published in January, discusses Claude in terms previously reserved for humans – incorporating concepts like ‘virtue’, ‘psychological security’ and ‘ethical maturity’.

The latest iteration of the constitution asks that we respect chatbots as a new form of life. It even insists the law must be amended to take account of this new development. Anthropic imbues its statistical models not only with personality and behaviour, but also with independent moral reasoning. The chatbots’ bill of rights is about giving Claude a sense of right and wrong, which is needed, Askell explains, because ‘they’ll inevitably form senses of self’.

Really? This is an exercise in anthropomorphism. As a next-word completion engine, a transformer model like Claude is no more capable of developing a sense of self than a door handle or a radiator.

As Luiza Jarovsky, who runs a popular newsletter on AI governance, puts it, ‘Claude cannot “genuinely care about the good outcome”, and it cannot “appreciate the importance” of anything’, despite what Askell claims. ‘Claude’s constitution oozes harmful anthropomorphism from beginning to end’, Jarovsky says.

But should we be surprised that an AI firm is promoting such a nonsensical idea? Not when we examine the complex relationship between the consequentialist utilitarian project of Effective Altruism and the ‘high-fantasy cult’ of Anthropic.

Researcher Nirit Weiss-Blatt has uncovered what she calls the ‘AI existential-risk industrial complex’. She estimates that $1.5 billion has been spent by EA philanthropists on exploring the supposed threat of artificial superintelligence. While this is far short of the $25 billion spent globally on cancer research each year, cancer is at least real. AI superintelligence is entirely hypothetical – a technology that does not exist today, and may never exist.

Askell was born Amanda Hall in the late 1980s, raised in Prestwick in Ayrshire. She married a contemporary analytic philosopher at Oxford, William Crouch, who became a collaborator. They took the surname MacAskill. After their divorce, Crouch kept the surname, while Hall amended it, or Amanda-d it, to Askell.

MacAskill was instrumental in creating the philanthropic movement that applied savage utilitarian logic to charitable acts, agonising over how to maximise their impact. In 2009, the rationalist forum, Less Wrong, was founded to provide a space for rationalist utilitarians to discuss the world. MacAskill’s Centre for Effective Altruism, which followed two years later, gave the fledgling movement a name.

The logic of the Effective Altruists has taken them to some dark places, developing very peculiar beliefs that even Jeremy Bentham might find disturbing. Contributions by ‘animal liberation’ advocate Peter Singer, now described as a founding father of EA, played a large part in downgrading human concerns for those of other conscious creatures, with the utility-maximisers becoming curiously concerned with the welfare of shrimp. There are lots of shrimp, so minimising their suffering counts big, the logic goes.

Far darker is the idea that calculations of utility must include future humans – a proposition that flies under the innocent-sounding banner of ‘long-termism’. This has led Less Wrong founder Eliezer Yudkowsky – the founding father of AI doom-mongering, whose interest in it predates EA by several years – to call for precautionary airstrikes against data centres and chip factories. For living humans, the deck is always stacked against us: there will always be more future humans than those living today. After her Wall Street Journal profile appeared, a piece Askell wrote in 2015 resurfaced, in which she called for the culling of apex predators. Critics point out that, in EA logic, the apex predator is human beings.

Even stranger is what EAs call the ‘infinitarian’ problem. The universe could be infinite, transhumanist Nick Bostrom has proposed, and there may be many worlds or multiverses. So we must consider the consequences of our actions in those hypothetical worlds, too.

All the while, the needs, concerns and values of humans who are alive today are downgraded and ignored by Effective Altruism. It is a post-Christian, post-socialist and post-humanist philosophy – a radical break with the past.

All the more disturbing is that these utilitarians now make regular policy interventions, via new EA-funded initiatives that adopt the language of ‘growth’ and ‘progress’. The ‘Progress Studies’ project was developed to advance EA ideas and utilitarianism, but without the stigma of its nuttier fringes. We were not supposed to join the dots, economist Tyler Cowen admitted in a podcast last year. Their project ‘would never be such a formal thing or controlled or managed or directed by a small group of people or trademarked’, Cowen said. ‘It would be people doing things in a very decentralised way that would reflect a general change of ethos and vibe.’

Where does that leave us today? The connections between Anthropic and EA are so deep it’s tempting to think of the company as an EA vehicle that just happens to also make AI models. The co-founding siblings, Dario and Daniela Amodei, are early EA advocates. Daniela once worked for Stripe, the biggest supporter of the ‘Progress Studies’ project. Most of Anthropic’s initial funding came from EAs, too. Anthropic’s ‘constitution’ embeds EA principles – and note how its title mimics the governmental formalities of a nation state or the UN. Daniela Amodei’s husband, Holden Karnofsky, founded the giant EA grant-giving organisation Open Philanthropy (now Coefficient Giving).

The danger, as religious scholar Isaac May has pointed out, is that ‘having any community with such authority over the future of technology is perilous’. Jarovsky and others regard ‘Claude’s Constitution’ as a political exercise, one that is ultimately self-serving. ‘By replacing human feedback with AI self-critique during training, Anthropic removes elements that could provide democratic legitimacy’, writes one think-tank. Or as Jarovsky writes: ‘The company is advancing new, unpopular, and legally questionable theories of AI personality to support a parallel, weaker accountability framework for AI companies… Their goal seems to be to create a higher-status, exceptionalist system for AI models and AI companies.’

Anthropic is guilty not only of wildly exaggerating the capabilities of AI, and promoting dystopian fantasies of ‘AI doom’, but also of quietly advocating the deeply misanthropic philosophy on which it was founded. ‘Claude’s constitution is an unfortunate development in AI governance that minimises human values, rules, and rights’, Jarovsky says.

But knowing what we know about Effective Altruism, perhaps that isn’t a defect, but the main feature of the product.

Andrew Orlowski is a weekly columnist at the Telegraph. Visit his website here. Follow him on X: @AndrewOrlowski.
