The myth of ‘artificial intelligence’

AI has consistently failed to deliver results – so why do our elites have so much faith in it?

In his superb book, Dominion, historian Tom Holland finds parallels between the early Christians and today’s judgemental theorists of gender and race. Both can be called ‘social-justice warriors’, he notes. Each sees a judgement day close at hand, and each has zealots who relish their role as judge, jury and hangman. Wokeness is just one modern mania that has a distinctly religious quality. Arguably, there are two other modern religions that eclipse wokeness in their scope and ambition: environmentalism and ‘artificial intelligence’ (AI).

Environmentalism expresses a desire to subordinate human development and welfare to a new, all-encompassing mission – that of reducing the atmospheric concentration of carbon dioxide. An ‘emergency’ or a ‘crisis’ has been declared by activists, one which supposedly requires the suspension of political and moral norms. Every aspect of our lives is recast into this new moral framework.

Karl Marx recognised how religion gives society its shape and moral order. He called religion ‘the general theory of this world, its encyclopaedic compendium, its logic in popular form, its spiritual point d’honneur, its enthusiasm, its moral sanction, its solemn complement, and its universal basis of consolation and justification’. But Marx also recognised religion’s devotion to the idea that human beings are exceptional and unique: ‘It is the fantastic realisation of the human essence.’ Religion is a form of fetishised or estranged humanism, Marx was saying.

Environmentalism turns this celebration of humanity on its head. Human activities are measured by the ‘harm’ or ‘impacts’ they cause to the natural order, and all human activity is therefore sinful. We ate the forbidden fruit by burning fossil fuels and by daring to increase human welfare – and now we must pay. Even the UK prime minister signals his support for this philosophical belief when he describes the Industrial Revolution as a derangement of nature, or a ‘doomsday machine’.

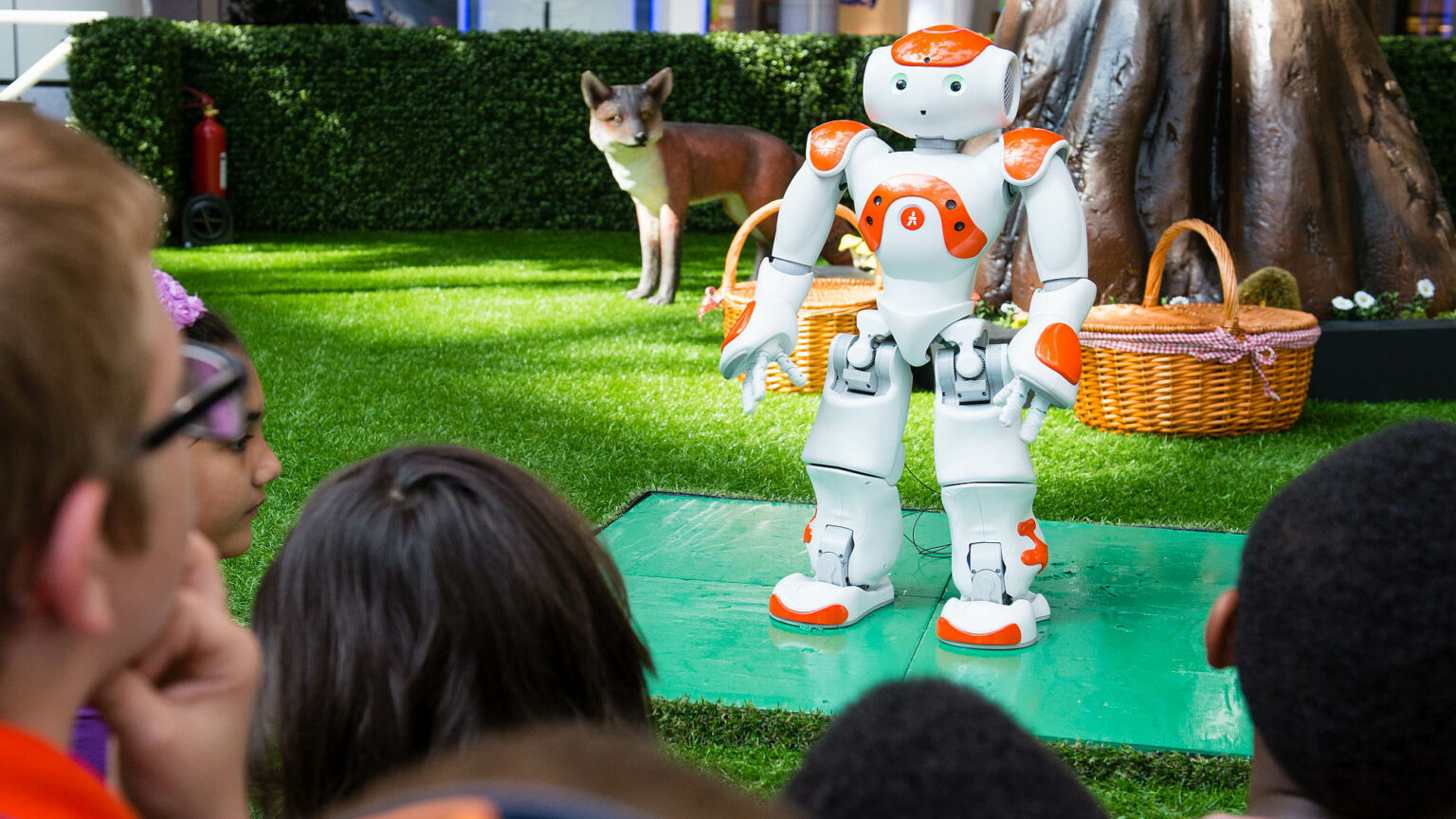

Equally religious, and equally anti-human, is the current infatuation with AI. We are currently in the third wave of enthusiasm for AI in 65 years, during which periods of high hopes and investment in AI have been followed by periods of derision. This time, however, belief in the transformative power of AI has penetrated the policy, media and administrative classes as thoroughly as the belief in apocalyptic climate change.

Today’s AI develops an idea that has been around from the start. It uses multi-layered neural networks which calculate probabilities to find statistical regularities – or patterns.

The field is rife with anthropomorphic metaphors: AI is undergoing ‘training’, for example, or ‘deep learning’. But these terms are really misdirections, for the software has acquired no knowledge or understanding of the underlying data it is processing. Instead, the software has bludgeoned its way through a task using brute force – producing a statistical approximation to achieve a result.

A better name for the various activities currently undertaken by AI may be ‘heuristic software’. But then this might remind us that it’s guesswork, and that things can go wrong. Sometimes this guesswork can be impressive. At other times it is sufficient to be useful. Often it is not, and AI’s ignorance of the real world can be painful, and hilarious.

But companies selling AI software or services claim a great deal more on AI’s behalf. ‘AI is one of the most important things humanity is working on’, insists Sundar Pichai, CEO of Alphabet, Google’s parent company. ‘It is more profound than, I dunno, electricity or fire’, he even claimed last year.

Our political elites accept such claims at face value, because doing so allows them to indulge in a little vanity. They can imagine themselves taking their place alongside the boffins, as visionaries or vanguardistas, as the future sweeps in. Five years ago, I was one of over 200 people – and one of only three from the professional media – invited to give oral evidence to a House of Lords inquiry into artificial intelligence. In advance, we were given nine points on which the lords might wish to hear our views. One of these was how we would prepare the population for the sweeping changes that were to come from new developments in AI. Apparently, as journalists we were not expected to question such improbable claims. It was taken as a given that AI would soon be a smashing success.

Five years on, the hype has reached new levels of absurdity, with the artistic pastiches produced by models like OpenAI’s GPT-3 language generator being mistaken for human-like sentience.

The political class was promised a ‘fourth industrial revolution’, but AI is conspicuously failing to deliver tangible practical results. Yes, it is becoming another useful tool in the data-analytics toolbox. But it has failed to make an impact on other key areas, such as robotics, just as sceptical robotics scientists predicted.

Not one radiologist has been made redundant by AI, the neuroscientist and author Gary Marcus pointed out recently. Marcus has argued for some time that the current approach to AI has hit a wall, and is proving to have very little use outside the IT industry. AI remains extremely crude and dumb. For his pains, he finds himself in the same boat as so-called climate deniers. And with uncanny echoes of Climategate, the AI priesthood even refuses to allow researchers like Marcus to view or test the models themselves, in case they find something wrong with them. Nevertheless, the stunts – and AI is a faith that requires regular ‘miracles’ – get ever more spectacular.

In fact, invoking religion or magic when flogging AI is not new. The original term was a triumph of marketing. A young professor called John McCarthy, who co-edited an obscure academic journal called the Journal of Automata Studies, decided that this new branch of mathematics could use some pizazz. Automata weren’t sexy enough. ‘I invented it when we were trying to get money for a summer study’, McCarthy would later admit.

The appeal of being God, of ‘artificially giving birth’, was something Professor Sir James Lighthill identified as one of AI’s promises. Lighthill undertook the review that cancelled most of the funding for AI in 1973. Today, DeepMind – the AI subsidiary of Alphabet – is a master at evoking ‘unexpected’ or ‘creative’ outcomes supposedly produced by its deep-learning applications, which critics refer to as ‘It’s alive!’ moments. These tricks work spectacularly well with journalists, who are only too willing to suspend their scepticism. Such credulity is abundant, for example, in a long cover feature in The Economist this month, which marvels at the ‘emergent properties’ of an AI that ‘border on the uncanny’.

Throughout those first and second AI summers, religious claims were never far away. During the second revival of AI in the 1980s, philosopher Mary Midgley lamented how dreary and familiar all the great claims about AI sounded to her.

‘They promise the human race a comprehensive miracle, a private providence, a mysterious saviour, a deliverer, a heaven, a guarantee of an endless happy future for the blessed who will put their faith in science and devoutly submit to it’, she wrote in a review of a 1984 book by Professor Donald Michie, one of the leading British AI academics (Michie led one of the few departments to survive the 1970s AI winter). ‘Is it clear why I was reminded of hymn books?’, asked Midgley. Michie exhibited a ‘crude indiscriminating euphoria’, she wrote, and there is no better description of his successors almost 40 years later – they too have a liturgical quality.

What AI shares with radical environmentalism is a longing to create an external moral arbiter. With apocalyptic climate change, the planet is judging us because we dared improve our lot. In AI’s Jesuit wing – transhumanism – man hasn’t fallen; we were just awful all along. Among transhumanists, there is a revulsion toward the physical body, which decays and defines a fixed form, and also a revulsion at what is characterised as our hopeless irrationality. We have always been inferior to the machines, they argue, but those machines just hadn’t been invented yet. By submitting to the machines, we become free, as Grimes’ 2018 single, ‘We Appreciate Power’, articulates:

‘People like to say that we’re insane

But AI will reward us when it reigns

Pledge allegiance to the world’s most powerful computer

Simulation: it’s the future.’

Here the religious overtones are explicit – immortality is achieved by digitising the physical and uploading it. The deeply misanthropic idea that humans are not unique, and are in fact a bit rubbish, is not a new invention of the AI evangelists, of course. It has become commonplace in fields such as neuroscience and cognitive science to argue that consciousness is a trick of the mind, that the subjective self is an illusion or a trick of the brain circuitry. Cognitive scientist and philosopher Daniel Dennett was making this case three decades ago. A parallel, materialist view is even older: the proposition that we’re just poorly functioning machinery was expressed by Richard Dawkins in his 1976 bestseller, The Selfish Gene, where he wrote: ‘You, dear human, are simply a gigantic lumbering robot.’

In the early 2000s, computer pioneer and technology critic Jaron Lanier recognised these two beliefs as two cheeks of the same backside – a backside he called ‘cybernetic totalism’. He was dismayed that so many highly intelligent friends of his in science and technology were sympathetic to this collection of prejudices, in part or in whole. Of the six characteristics he identified of this worldview, one was that ‘subjective experience either doesn’t exist, or is unimportant because it is some sort of ambient or peripheral effect’. Subjectivity has long been unfashionable among the intelligentsia, as James Heartfield identified in The ‘Death of the Subject’ Explained in 2002. Twentieth-century intellectual fashions like structuralism, cognitive science and more recently behavioural science merely added some intellectual respectability to these prejudices.

Two decades ago, Lanier already had an explanation for the supposedly ‘magical’ and ‘emergent’ properties of today’s AI. ‘To make the computers look smart, we have to make ourselves stupid’, he observed. It requires a curious act of self-abasement. Unfortunately, abasing ourselves is a habit to which our elites seem strangely addicted. Hollowing out what it means to be human has cleared the path for both artificial intelligence and apocalyptic environmentalism, two of the most powerful religions of the 21st century.

Andrew Orlowski is a weekly columnist at the Daily Telegraph. Follow him on Twitter: @AndrewOrlowski.

Picture by: Getty.
