
The ideal of authenticity

Charles Taylor explores the making and conflicts of the modern self.


Phrases such as self-fulfilment, self-actualisation and, perhaps most easily loathed of all, self-help have all served as lightning rods for critics of the modern world. They appear to capture all that is navel-gazing and narcissistic about society today, and serve to indict us for our shallow, self-obsessed individualism. But Charles Taylor, philosopher and candidate in three Canadian federal elections, is different. In works such as Sources of the Self: The Making of Modern Identity (1989), The Ethics of Authenticity (1992) and A Secular Age (2007), this former student of Isaiah Berlin and renowned interpreter of Hegel refuses to indulge in cultural pessimism.

Yes, as he himself writes, there is much that is shallow and narrow about forms of modern selfhood. Relationships are revocable, ties don't bind, deracination does rule. But he sees these forms of individualism as deviant and debased forms of the 'ideal of authenticity': the conviction, that is, that one ought, above all, to be true to oneself. As the spiked review discovered, Taylor seeks to rescue this ideal, a crowning achievement of modernity, from its moralistic knockers just as much as from its narcissistic debasers. He also talked about identity and the need 'to see what is great in the culture of modernity, as well as what is shallow or dangerous':

spiked review: You have written that in the pre-modern era people didn’t speak of ‘identity’ and ‘recognition’ because these were unproblematic and didn’t need to be thematised as such. What changed with the advent of modernity? Why did ‘identity’ and ‘recognition’ become problematic?

Charles Taylor: The change in the meaning of the word 'identity' is very interesting. People now talk of 'my identity', 'your identity', of 'respecting identity' and so on. I think it's something to do with what I define as the ethic of authenticity, which was widely taken up during the Romantic period. It's the idea that everyone has their own way of being human. The ethical part is that you're meant to live up to that way of being human, of being yourself, and not be taken over by conformism, or by some other alien way of living given to you by your parents or your society.

But if you look at the period in which the ethic of authenticity originates, it emerges hand in hand with the idea, articulated by Johann Gottfried Herder at the turn of the 19th century, that we’re all different, we all have different capacities, different potentialities, and we have to work them out and find some expression for them. We have this sense that this is what we’re about, this is what we should do, and we try to find a way to define it. And that’s why recognition plays such an important role, because we never work these things out by ourselves. We always work them out in dialogue, exchange and struggle, first of all with our parents, then with wider society. So there can be a situation in which your primary intuitions get blocked, your parents don’t understand you, or the people around you don’t understand what you’re talking about. They think you’re ridiculous, dangerous. And that can really block the development of your self.

Now contrast this situation with, for example – and these are ideal types – a pre-modern hierarchical society in which we all have different paths, but the paths, the scripts, are written by society. A man is a knight, or a king, or a page, and a woman is a lady, or a peasant’s wife. The script is already written by your social situation. So the only question is whether you succeed in that role, whether you fulfil that script. If you’re a knight, and you’re a terrible coward, or you can’t bear the sight of blood, you’re not going to succeed. And there the recognition of others plays another kind of role, of classing you as a success or failure.

But in the modern period, which eventually produces the ethic of authenticity, not only are we getting rid of these scripted caste distinctions, but what I'm supposed to be, and my difference from you, have to be worked out. That's why the word identity becomes so important: it articulates a sense of what's really important in my life, a standard which I'm trying to live up to. Although that goal or standard certainly contains universal features, it is something specific to me. That is why there's such a tremendous emphasis on recognition, on having that sense of self recognised.

Non-recognition can even be seen as a way of blocking my self-development. That is, my difference is not being recognised. Think of the gay movement, for example. It's very interesting how it mutates in the middle of the 20th century. In the 1920s, you get someone like André Gide, who comes out of the closet because he thinks it's awful that people have to suffer and hide their preferences. When you get to Stonewall in the latter half of the 20th century, there's another way of framing it: here's an identity, which is as good as any other, and it's being treated differently. It's that framing of discrimination in terms of identity that gives special energy to the gay-liberation movement. And it also gives tremendous energy to the push for gay marriage later on. So discrimination framed in terms of identity and sense of self provided the gay movement with the energy to win a series of victories in a relatively short space of time.

review: Could you say a little bit more about the main intellectual and moral sources of modern selfhood? In the ideas of self-mastery and self-knowledge, for instance, there's the legacy of 17th- and 18th-century rationalism. But, paradoxically, there's also a strong counter-rationalist Romantic current. Is the modern idea of selfhood the product of clashing intellectual tendencies?

Taylor: This is something I try to explain in Sources of the Self, namely that there's a really big fight going on within ourselves. We are solicited by very different dimensions of selfhood. When it comes to realising the self, on the one hand, you have the instrumental self, who ought to be free to pursue his goals, provided that doesn't impinge on, or create injustice for, others. On the other hand, you have an expressive dimension of the self. This is concerned with the ethic of authenticity, which in turn renders the issue of aesthetic expression tremendously important. And what you have is a battle going on, both a tension in each one of us and a fight between tendencies in the external, political world. So some say that what is really important is the freedom to pursue our own interests (that's neoliberalism), and let's not have any of this nonsense about beauty or about destroying the environment and so on. And, on the other hand, you have people who are taken with the other tendency, who are concerned with expression of the self, with non-instrumentality. So it's a battle that is within each of us, but one in which we also take sides. It is one of the constituent battles of our culture and civilisation.

review: You mention the importance of aesthetic expression, and Nietzsche even argued that we should make ourselves works of art – why has the aesthetic been so central to modern ideas of selfhood?

Taylor: You can see how there’s a very deep interweaving, coming from the Romantic period, of the ethic of authenticity on the one hand, and the elevation of the aesthetic on the other. So when people began to work out the ideal of authenticity at the end of the 18th century, and the beginning of the 19th century, there was also a corresponding emphasis on originality in art. This was new. If you go back far enough, when people made religious icons, they were craftsmen. They had no sense of their work being original or even that it would be good if it was original. Their products weren’t supposed to be original; they were supposed to be artefacts. And this has been totally overturned during the past two centuries. We now admire art, or music, or poetry not for its craftsmanship – the extent to which it realises a template – but for its originality. So you can see the interweaving of the aesthetic with the ethic of authenticity. The seeds were there from the very beginning.

So it’s possible, if you look at ethics in the broad sense of what the good life is about, then people can say that the good life is really about this superior self-expression, of being original, rather than about what you might call morality, which is doing the right thing by other people. Indeed, for someone like Nietzsche, morality, doing right by others, gets in the way of what he sees as the real substance of the good life, which is self-realisation, becoming an Übermensch and so on. I think you can see that that’s part of the whole turn in modern culture, which promotes the aesthetic to the highest possible position.

review: Individualism tends to get a bad press today. It’s associated with selfishness, shallowness and, to a greater extent than it was even in Christopher Lasch’s time, with narcissism – think, for instance, of the torrent of critical commentaries on ‘the selfie’. Yet what’s fascinating about your work is the willingness to grasp modernity and, therefore, the rise of individualism, in terms of, as you put it, its grandeur as well as its misère. Could you say in what its grandeur lies, and in what its misère lies?

Taylor: You can understand why individualism is associated with selfishness and so on. Very often the idea that I've got my own way of being, and I have to work it out, can be captured by highly inauthentic, general ideas in circulation, such as the attempt to be oneself by being like a famous pop star. That's a trivial way in which it can work itself out.

But it can also work out in such a way that justifies a great deal of irresponsibility. It's interesting that, in the postwar period, the ethic of authenticity gets woven into the consumer society. This is a society in which we take for granted that the majority of people, either now or in the near future, will not be primarily concerned in their economic life with mere necessities, but with optional or discretionary spending. And into that situation comes the struggle of different corporations to sell their products, and they do so, to a tremendous degree now, in terms of 'style'. Nike says 'Just Do It', and you think you are being brave in affirming your particular style in life by buying a pair of running shoes. So you can see that there is a tremendous trivialisation of the ethic of authenticity going on. At one end of the spectrum, you have great poets, like Keats and so on, who struggled against the immense pressure of their society to realise something really valuable. And at the other end, you get Nike shoes. So it's quite understandable that a lot of people, from Allan Bloom in The Closing of the American Mind to Lasch in The Culture of Narcissism, defined the culture of authenticity in terms of the trivialised end of the spectrum.

But that’s not the way it actually is. Like any ethic in history, it has its noble and ignoble forms. Take the ethic of self-sacrifice in the military. It can take the form of a lot of young people sitting in uniforms at their home base posturing, ‘we’re warriors, we’re soldiers’, just as it can take the form of people willing to give their lives for their country. Or the ethic of sainthood, for example, which can become a tremendously conformist, rule-following, crabbed form of Christianity. You can’t name an ethic that doesn’t have debased forms, and sometimes there are a lot more examples of the debased form than of the higher form.

If you examine any of the ethics concerned, and look at what it is to live by them, you can see right away that a particular ethic is not something you can cement on your own. It's worked out between you and others. It can also take very serious forms, such as a search for a very important and original part of one's identity. The ethic of authenticity needs to be seen in that light. Anyway, you couldn't turn the clock back. Trying to go backwards would not only suppress some good things; it would also be futile.

review: Many thinkers and writers have basked in the 'God is dead' story of modernity, updated as the 'end of metanarratives'. This is the idea that there is no grand purpose or end to life in light of which one's existence makes sense, whether religious notions of redemption or secular tales of progress and communism. Yet in your work you insist that the modern self still relies on what you call a 'horizon of significance', that is, a framework through which one makes sense and finds meaning in the world. Could you explain why this remains the case?

Taylor: I have very little sympathy for the God-is-dead, end-of-metanarratives idea. It's a pragmatic contradiction. What people like Jean-François Lyotard assert is something like: 'Up to a certain date, we were all into grand narratives, then we saw the light, and we left them behind.' Well, that itself is a metanarrative. It's a pragmatic contradiction which is unsustainable, because we all live our lives narratively. We see ourselves as either going towards, or going away from, where we want to be. Or we may change our views on what we want to be, and then we're back on the right track, whereas before we were on the wrong track. This is how we read ourselves, both as individuals and as societies and civilisations. And I don't see how you can get away from that unless you have absolutely no narrative dimension at all to your self-understanding. The mind boggles at what that would be like.

review: Why do you think this idea of one’s life as a story is so integral to the formulation of modern selfhood? And why can this story not be told without, as you put it elsewhere, an orientation to the good?

Taylor: This is where the contribution of the psychologist Erik Erikson is very important, because in the 1960s he introduced the term identity in the context of his idea of 'identity crisis'. He argued that one's identity is what is fundamentally important to that individual. It orients him or her because it provides the context in which he or she can make a whole range of other decisions which flow from it. An identity crisis is where there is some kind of breakdown: a deep conflict in the sense of what is really important to me, or even the loss of a sense of what is really important to me. The people Erikson worked with when formulating his idea of identity crisis were the children of a Sioux tribe in the US. They were more or less assimilated, and they went to mainstream schools. But they had this identity conflict because their aboriginal self-understanding really had no place in the schooling system, which did not even understand it.

There are other cases in which identity crises manifest themselves. Take adolescents, for example, who suddenly find that all the things they were living by and accepting break down. Those things, such as values and beliefs, don't speak to them anymore.

What Erikson showed was that an identity crisis isn't just a case of 'I used to think this, and now I think that'. It is in fact deeply disturbing. It almost stops you living, because you don't know what it is to live. And that shows that our identity is inseparable from what we think is really important, what is right and good. So identities always incorporate a sense of a better way of living, or a good way of living. You can't define an identity without entering into that space of defining the better and the worse.

I should add that I don’t think it’s only modern selfhood that’s concerned with the story of one’s life. In the pre-modern period, say my position is that of a young aristocrat, so I have to be a knight or a soldier or something. There’s a story here: a story of my struggling to fulfil that role. Now it’s a different kind of story, with a script that I carry out or fail to carry out, to the story of realising myself. They’re different kinds of story, but they’re both stories. It’s difficult to imagine what life would like without that story.

review: What do you make of the increasingly scientistic understanding of the self, in that it is reduced to just one object (neuroscientific or otherwise) among others?

Taylor: Take the scientistic understanding, promoted by the likes of Daniel Dennett, Steven Pinker and so on. Here we're conceived as thinking beings with brains that can be understood like a computer, as the hardware (although they're soft). Now the concepts of computer programs and complex algorithms don't engage at all with the concepts I find myself using as I try to work out my identity. These are concepts of its relation to you and your gaze on me, and of issues of the good and of the less good. They belong to a language whose validity depends heavily on its being the right interpretation of what I'm feeling and what I'm experiencing. There's a total gap between these two languages, and nobody has any clue how you can move from one to the other.

What's needed here is a relationship like the one between our ordinary talk about temperature (this is warm, this is hot) and talk about the kinetic energy of molecules. That's the classic example of a reductive explanation. But in the case of the self, no one has the foggiest idea as to how to bridge the gap between the scientific conceptualisation of the brain and our ordinary sense of selfhood. They pretend to, of course, because they have a picture of a brain that makes it look like a computer. But what in that neuroscientific interpretation relates to my account that, say, this is a horrible way to be, or that I'm deeply alienated, or this is really fulfilling, or this is really admirable? There's just no connection there. Now I'm not saying it could never happen or that it's untrue. But the challenge is to produce this kind of 'bridge' language, and we don't even have an idea as to what that would look like. That's a big promissory note.

Besides, one can’t imagine doing without the language of self-explanation, self-understanding and self-searching, because that’s the language we use for the activity of seeing who we are, what we want and what’s really important. We will never be able to get rid of that language, just like we can’t get away from the language of hot and cold. Perhaps by the 25th century, we will view ourselves entirely in terms of brain science. But it seems to me wildly implausible. You can’t say ‘it will never fly, Orville’, because you have to give science a chance. But it does seem highly unlikely.

review: And, finally, the idea of self-determination – which underpins the development of modern democracy – is increasingly called into question today. One thinks, for example, of the popularity of behavioural economics (so-called nudge theory), and the ‘soft despotism’ and paternalism of Western governments. What do you make of this development?

Taylor: There’s something in the nature of modern democracy which always makes it problematic. If you go back to the ancient idea of democracy, it has a slightly different meaning. It meant rule by the demos. But if you think of modern democracy, you think of rule by everyone, the whole people, right? But the word ‘people’ is ambiguous today. Sometimes, it means the non-elite people, as opposed to the guys on top, hence formulations like ‘they’re not listening to the people’. And then, in another sense of the word, we say democracy is rule by the people, and by that we mean everyone. The Ancient Greeks didn’t have this idea of rule by everybody, because the idea of who was ruling was very clear through face-to-face contact. It was either the whole assembly deciding on a matter, or a much narrower group mainly emanating from the aristocracy and the rich, and there was no problem as to who was calling the shots. Modern democracy is very complex because it refers to very large societies and makes massive use of various forms of representation and representative institutions, because otherwise you couldn’t even begin to imagine what ‘the people call the shots’ would mean. So it’s always open to the questions: aren’t these complex institutions meant to represent the will of the people, and is anything like that happening? Are they being captured at various points by bureaucrats, by lobbyists, by the rich? Modern democracy always potentially invites deep suspicion.

What makes a difference, I think, is that people have the sense that, yes, there is something like democracy going on when the distance between whoever the directly ruling people are (ministers, lobbyists, paymasters) and the mass of people is in some way lessened. That happens because money is not running the whole show, we have real popular movements that are having an impact, and so on. And in modern Western democracy, I think you can see a series of epochs when things were moving towards something that looks like the people ruling, and other periods when we were moving away from this ideal.

So, after the Second World War, with the development of the welfare state and various forms of universal provision, and the existence of powerful trade unions who could fight against employers, there was a sense that we were moving ahead. And that was partly because the great inequalities between rich and poor had been reduced, and greatly reduced in the US. Between, say, 1900 and 1950, there had been a real compression of what in the robber-baron days were just astronomical inequalities. After about 1975, in the US and the West in general, it moves the other way. And one of the measures of this is the spectacular growth in inequality. Correspondingly, money seems to talk a lot more loudly. So the suspicion grows that these institutions don't represent the rule of the people. And consequently, more and more people don't vote, which means that it is increasingly true that these institutions really don't represent the people. So you get a spiralling towards what could be a believable democracy between 1945 and 1975, and a spiralling away ever since. I think that if we ever reverse the growing inequality, we'll get a sense that we're on the road back again.

But I think the thing about modern democracy is that at best you are only on the road to, rather than at, the point at which the people really rules.

The popularity of behavioural economics among today's rulers must be understood within the context of the imperfections of modern democracy. And behavioural economics is highly manipulative; Cass Sunstein's nudge theory is highly manipulative. Of course, you might argue that we're never going to have an absolutely perfect expression of the popular will, and that the popular will is probably shot through with irrationality anyway. So let's try to cope with that by being the smart ones manipulating 'them', but manipulating them in a benign way because it's for their own good. That's a whole understanding of democracy which I call Schumpeterian, after Joseph Schumpeter. On this view, democracy just consists in everyone having a vote every four or five years, and throwing the bastards out if you want to. Then, after the election, we go back to the real situation, where there's one gang up there really running the show. The only power the people wield is that the gang up there is looking over its collective shoulder, worrying whether it's going to get re-elected in a few years' time. That's a conception of democracy that gives up on the strong idea of popular rule.

Charles Taylor is a philosopher and professor emeritus at McGill University. He is the author of many books, including: Hegel (1975); Sources of the Self: The Making of Modern Identity (1989); Multiculturalism: Examining the Politics of Recognition (1994); and A Secular Age (2007).

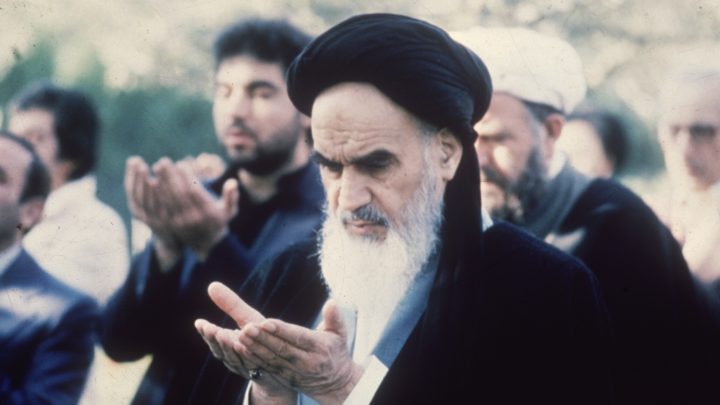

Picture by Michael Craig, published under a Creative Commons licence.
