Epidemic of fear

We were scared to death long before 11 September.

Many claim that 11 September ‘changed the world forever’, particularly in its impact on public perceptions of risk, creating a sense that we live in an ever-more risky world. But it is wrong to blame today’s culture of fear on the collapse of the World Trade Centre. Long before 11 September, public panics were widespread – on everything from GM crops to mobile phones, from global warming to foot-and-mouth.

One of the key points I make in my book Culture of Fear is that perceptions of risk, ideas about safety and controversies over health, the environment and technology have little to do with science or empirical evidence. Rather, they are shaped by cultural assumptions about human vulnerability. (A revised version of Culture of Fear has been published to take into account 11 September – see below.)

It was my experience of the 1995 contraceptive Pill panic that motivated me to write Culture of Fear. I carried out a global study of national reactions to the panic, and it quickly became clear that the differential responses were culturally informed. Some societies, like Britain and Germany, responded in a confused, panic-like fashion – while countries like France, Belgium and Hong Kong adopted a more calm and measured approach.

Seven years later, British culture has become even more uncomfortable with managing change and dealing with risks. Paradoxically, there is currently a new episode in the Pill panic – with Pill manufacturers in the UK facing a court action organised by lawyers who are relying on the kind of dubious claims that provoked the panic in the first place (1). In early March 2002, newspapers ran headlines like ‘Is the Pill killing us?’ and ‘Killer Pill’. For women who read about the ‘disastrous injuries’ and deaths suffered by the victims of callous Pill manufacturers, contraception must seem like dangerous territory. Even though the officials responsible for precipitating this panic have since admitted that the disputed Pills are safe, the general impression created is that people have been kept in the dark about ‘disastrous side effects’.

But it would be wrong to see this most recent act in the Pill panic drama as simply a continuation of past controversies. The balance has shifted even more in favour of emotionalism, suspicion and blame – and scientists, researchers and Pill manufacturers sound more defensive than before. Why? Because they intuitively grasp that when it comes to public debate the power of emotion triumphs over the cold facts of science (2). A distraught parent clutching a picture of her daughter lost to the ‘killer Pill’ will always appear more genuine than an expert mumbling about the facts.

In 1996, the insurance company Swiss Re (3) published a report by Christian Brauner called Electrosmog: A Phantom Risk (electrosmog is the popular name for the non-ionising radiation emitted by mobile phone antennas, which some claim is a health risk). Brauner predicted that there would be a huge rise in the number of insurance claims related to the alleged health risks of using a mobile – but he argued that the claims would have little to do with the incalculably small health risks of using a mobile, and more to do with the incalculably great risk of socio-political change. The report feared that changing social values could result in scientific findings being evaluated differently in the future – pointing out that ‘we consider the risk of change to be so dangerous because it is evident that a wide range of groups have great political and financial interest in electrosmog being considered hazardous by society’.

Brauner’s prescient account of the politics of risk is based on a useful distinction between health risk and liability risk. Regardless of whether mobile phones are a health risk, cultural mistrust of new technology could still turn them into a liability risk. Put bluntly, if society wants to label electromagnetic fields from mobile phones a cause of illness, they will be labelled a cause of illness.

Even the perception that mobile phones are dangerous is seen as a potential health problem. New Zealand psychologist Ivan Beale, a leading anti-phone mast campaigner, argues that courts shouldn’t just rely on physical evidence when making a ruling about where mobile phone masts should be built. He argues that phone masts can constitute a health risk even if they don’t emit harmful radiation: ‘When exposure is invisible, as is the case with electromagnetic fields, the mere possibility of exposure is threat enough to produce fear, and fear leads to illness.’

In the UK, the Independent Expert Group on Mobile Phones (4) led by Sir William Stewart made concessions to the approach taken by Beale – claiming that people’s wellbeing can be compromised if their concerns about the risks of radiation from phone masts are ignored. The Stewart report claimed that the public’s frustration at being excluded from the decision-making process on phone masts ‘has negative effects on people’s health and wellbeing’. From a biomedical perspective, the redefinition of frustration as a health risk makes little sense. But as a statement about the mood of our time, it affirms the risk-averse social values that prevail today.

Society’s difficulty with managing risk is driven by a culture of safety that sees vulnerability as our defining condition. That is why contemporary culture regards the word ‘accident’ as politically incorrect. In Britain and America, public health organisations want to phase the word out – claiming that most injuries are preventable, and that calling them ‘accidents’ is irresponsible. In 2001, the British Medical Journal declared that it had banned the word accident from its pages, arguing that even hurricanes, earthquakes and avalanches are often predictable events that the authorities could warn us to avoid (5). Some child professionals insist that we should refer to a youngster’s bruised knee as a ‘preventable injury’, rather than an accident.

Such changes in medical terminology often reflect new cultural attitudes. Safety has become one of Western society’s fundamental values, and people find it difficult to accept that some injuries cannot be prevented. An injury caused by an accident is an affront to a culture that believes safety is its own reward. We find it hard to deal with uncertainty, partly due to the great progress made by medicine and science. Because we have so much knowledge, a chance occurrence is hard to accept – especially if it causes injury. So if two or three people who live near each other seem to suffer a similar illness, we demand an explanation. Local campaigns against mobile phone masts are often driven by a conviction that inexplicable illnesses in the area must have been caused by this new technology.

The idea that we should be immunised against accidents is reaching pathological proportions. Soldiers are currently suing the UK Ministry of Defence for failing to prepare them for the horrors of war. This might seem ridiculous, but it makes sense in a culture that is uncomfortable with misfortune. How long before the fire service is sued for failing to tell its workers that fire is hot?

In today’s culture of safety, risk management is continually driven towards engaging with all kinds of theoretical risks. The ‘What if…?’ question dominates today: ‘What if an asteroid collides with Earth?’ ‘The end is nigh’ is no longer a warning issued only by religious fanatics. In September 2001, scholars at the British Association Science Festival in Glasgow raised concerns about how a bizarre subatomic particle created through an atom-smashing experiment could potentially fall into the centre of the Earth and start eating the planet from inside out (6).

Since 11 September, all these trends have acquired an unprecedented momentum. In the post-11 September era, it often feels as though the world has been transformed into one big Hollywood blockbuster. Almost every area of life is a potential terrorist target – with scare stories about the threat of a smallpox outbreak, and bioterrorists infecting food supplies and water reservoirs (7). Leading US consumer activist Ralph Nader warns that if an aeroplane were to hit a nuclear power station, the meltdown could contaminate an area ‘the size of Pennsylvania’.

The prize for the best storyline goes to the Washington-based Worldwatch Institute – which raised the alarm about the ‘Bioterror in your burger’ (8). The Institute claims that meat-processing plants are especially vulnerable to attack, warning that terrorists could contaminate a huge amount of store-ready meat with E coli, salmonella or listeria.

You won’t be surprised to hear that Hollywood producers are busy commissioning scripts with a terrorism storyline. But what is astonishing is that the US army has turned to Hollywood for help with its war on terrorism. Since 11 September, some of the top military brass have met with filmmakers to brainstorm about possible future terrorist attacks.

US intelligence specialists have also sought advice from the movie men on how to manage terrorist attacks. Steven de Souza, who wrote Die Hard, and Joseph Zito, director of Delta Force One and Missing in Action, were among those reported to have attended the brainstorming sessions. According to Richard Lindheim of the Institute for Creative Technologies (ICT), the US military ‘wants to think differently’: ‘The reason I believe the army asked the ICT to create a group from the entertainment industry is because they wanted to think outside the box.’ (9)

The preoccupation with ‘thinking outside the box’ continually leads to the ‘What if…?’ question. What if a chemical factory becomes a terrorist target? What if a train carrying nuclear fuel is hijacked? What if a toxic biological substance infects the water supply?

Even the outbreak of foot-and-mouth disease in the UK has been linked to potential terrorist attacks. In September 2001, Sir William Stewart, a former government chief scientific adviser, warned that the difficulty the New Labour government had in dealing with the foot-and-mouth outbreak showed just how vulnerable Britain was to future threats from biological warfare (10). The ease with which Sir William made the conceptual jump from the crisis of British farming to the spectre of biological warfare shows how the contemporary sense of vulnerability helps transform problems into potential terrorist risks.

UK politicians were quick to agree with Sir William. A report published by the Select Committee on Defence stated that the ‘recent foot-and-mouth epidemic has demonstrated’ that ‘controlling the spread of some viruses is very difficult’, which ‘may suggest that the threat of biological attack is more serious’ (11). According to one US commentator, ‘Unleashing the foot-and-mouth virus’ could be as ‘simple as walking around on a US hog farm in boots worn on an infected British farm’.

Since 11 September, speculating about risk is represented as sound risk management. The aftermath of 11 September has given legitimacy to the principle of precaution, with risk increasingly seen as something you suffer from, rather than something you manage. Of course, taking sensible precautions makes a lot of sense. But continually imagining the worst possible outcome is not an effective way to deal with problems. Allowing speculation to dominate how we think about risks may even distract us from tackling the everyday problems and hazards that confront society.

We don’t need any more Hollywood-style brainstorming. We need a grown-up discussion about our post-11 September world, based on a reasoned evaluation of all the available evidence rather than on irrational fears for the future.

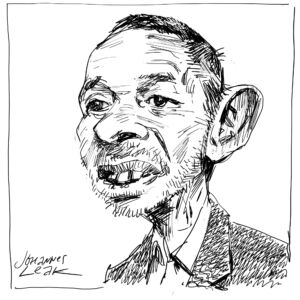

Frank Furedi is professor of sociology at the University of Kent. His books include:

- Where Have All the Intellectuals Gone?: Confronting Twenty-First Century Philistinism (Continuum International Publishing Group, 2004)

Read on:

Risk management goes global, by Christopher Coker

You can’t ban accidents, by Frank Furedi

spiked-issue: After 11 September

(1) See In praise of the Pill, by Jennie Bristow

(2) See GM food: putting fear before facts, by Tony Gilland

(3) See the Swiss Re website

(4) See the Independent Expert Group on Mobile Phones website

(5) See You can’t ban accidents, by Frank Furedi

(6) See End of the world is nigh, scientists insist, Tim Radford, Guardian, 7 September 2001

(7) See A pox on scientific debate, by Sandy Starr

(8) See The bioterror in your burger, Brian Halweil, Worldwatch Institute, 6 November 2001

(9) See Hollywood on terror, Australian Broadcasting Corporation, 21 October 2001

(10) See Biological warfare warning for UK, Tim Radford, Guardian, 3 September 2001

(11) See Weapons of mass destruction and national missile defence system, Select Committee on Defence
